3D graphics are now so widespread that anyone can get into them on a personal computer using free software. That accessibility has also produced many misconceptions about how professional 3D art is created and the workflow surrounding it. Many people think that you can create a model, put some materials and textures on it, and get a beautiful image instantly. However, rendering a picture is a completely different process from modeling. So what exactly is 3D rendering, and why is it such an important part of creating CG content?

Visualizing 3D as 2D

Every 3D object is, at its core, an array of vertex coordinates stored in the computer’s memory. These coordinates can be absolute or relative; in the relative case, only the pivot point has full coordinates, and each vertex is defined by its offset from the pivot along each axis (X, Y, and Z). Interpreting this array of numbers and transforming it into an image the human eye can read is called 3D rendering. In a sense, the computer performs the same task our brain does when it receives information from our eyes.
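
To make the difference between relative and absolute coordinates concrete, here is a minimal sketch in Python. The pivot position and the three vertices are made-up values, and the sketch assumes the object is neither rotated nor scaled, so converting to absolute coordinates is a simple addition.

```python
# Hypothetical data: a pivot in absolute (world) coordinates and
# three vertices stored as offsets from that pivot.
pivot = (10.0, 0.0, -5.0)
local_vertices = [
    (-1.0, 0.0, -1.0),
    (1.0, 0.0, -1.0),
    (0.0, 2.0, 0.0),
]

def to_absolute(pivot, local_vertices):
    """Add the pivot position to every pivot-relative vertex."""
    px, py, pz = pivot
    return [(px + x, py + y, pz + z) for x, y, z in local_vertices]

world_vertices = to_absolute(pivot, local_vertices)
print(world_vertices)
# [(9.0, 0.0, -6.0), (11.0, 0.0, -6.0), (10.0, 2.0, -5.0)]
```

Moving the whole object only means changing the pivot; the relative vertex list stays untouched.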

The main task of rendering is determining what will be visible to the viewer and how exactly it will look. In orthographic view, the task is a bit easier because objects don’t change size or shape with distance. In perspective view, additional calculations are required to determine how big each object will be on the screen. Some objects may shrink to dots, and others are not drawn at all because they lie beyond the far clipping limit, which is set to keep the calculations finite.
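
The two views can be sketched with a few lines of Python. This is a simplified illustration, not how any particular renderer does it: the camera is assumed to sit at the origin looking along the positive Z axis, and `focal_length` and `far` are hypothetical parameters chosen for the example.

```python
def project_orthographic(point):
    """Drop the depth: the screen position does not depend on distance."""
    x, y, z = point
    return (x, y)

def project_perspective(point, focal_length=1.0, far=100.0):
    """Divide by depth so that distant points move toward the screen centre.

    Returns None for points behind the camera or beyond the far limit.
    """
    x, y, z = point
    if z <= 0.0 or z > far:
        return None
    return (focal_length * x / z, focal_length * y / z)

near_point = (2.0, 2.0, 4.0)
far_point = (2.0, 2.0, 8.0)
print(project_orthographic(near_point), project_orthographic(far_point))
print(project_perspective(near_point), project_perspective(far_point))
print(project_perspective((2.0, 2.0, 200.0)))
```

In the orthographic case both points land on the same screen position. In the perspective case the point that is twice as far away lands half as far from the centre, and the point beyond the far limit is skipped.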

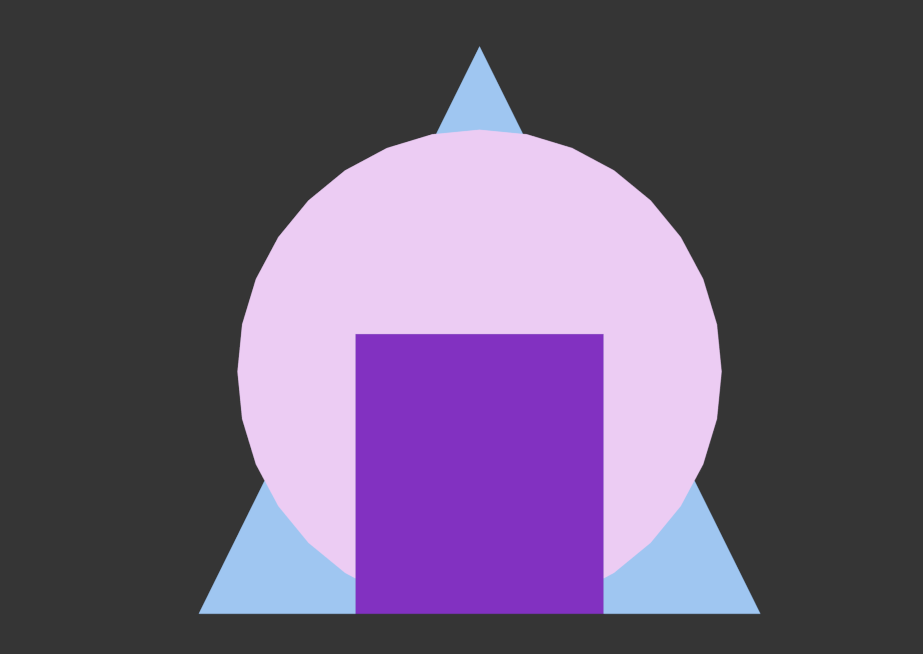

In orthographic view, the objects’ sizes stay the same no matter how far they are from the camera. In perspective view, the cone and the sphere appear much smaller in relation to the box.

After that, the rendering algorithm goes pixel by pixel and calculates each pixel’s color from the object visible there, its material, the light sources, and the shadows. Depending on the required realism, the available hardware, and the complexity of the algorithm, rendering an image can take anywhere from a fraction of a second to hours or even days. Wireframe rendering in 3ds Max is one of the fastest options.

Ray tracing and rasterization

There are currently two main ways of creating a 2D image from a 3D scene: ray tracing and rasterization. Rasterization has been around since the early days of computer-generated graphics. The computer divides all the objects in a scene into triangles, calculates their positions on the screen, overlays a grid of pixels, and then decides what color each pixel will be depending on material and transparency. This algorithm works well when you need to see everything in your scene clearly and understand what is in front and what is behind. The viewports in 3D software use rasterization to display geometry as you create it. However, when it comes to lighting and shadows, rasterization often only approximates the effects, and the result won’t look realistic on its own. Many additional algorithms add realism, and it is possible to create stunning images with rasterization, but preparing the scene for rendering takes much more time.
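
The core step of rasterization, deciding which pixels of the grid a triangle covers, can be sketched as follows. This is a minimal illustration that uses the common edge-function test at each pixel centre; it handles a single triangle already projected to screen coordinates and leaves out depth, color, and transparency. The function names are made up for the example.

```python
def edge(a, b, p):
    """Signed area test: the sign tells which side of the edge a -> b the point p is on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize_triangle(v0, v1, v2, width, height):
    """Return a grid of 0/1 flags marking pixels whose centre the triangle covers."""
    grid = [[0] * width for _ in range(height)]
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel centre
            w0 = edge(v1, v2, p)
            w1 = edge(v2, v0, p)
            w2 = edge(v0, v1, p)
            # Covered when all three tests agree in sign (works for either winding order).
            all_non_negative = w0 >= 0 and w1 >= 0 and w2 >= 0
            all_non_positive = w0 <= 0 and w1 <= 0 and w2 <= 0
            if all_non_negative or all_non_positive:
                grid[y][x] = 1
    return grid

grid = rasterize_triangle((0, 0), (4, 0), (0, 4), width=4, height=4)
for row in grid:
    print(row)
# [1, 1, 1, 1]
# [1, 1, 1, 0]
# [1, 1, 0, 0]
# [1, 0, 0, 0]
```

A real rasterizer repeats this for every triangle in the scene and keeps, per pixel, the surface closest to the camera.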

The ray tracing technique aims to create physically correct images. It imitates how we see: light rays hit surfaces and are reflected from them, depending on the material. Each reflection changes the properties of the ray, so when our eye catches it, we perceive a particular color. Ray tracers usually work in the reverse direction: rays are shot out of the camera (our viewpoint) towards the objects. When a ray hits a surface, it bounces (or passes through if the surface is transparent) and spawns several new rays. These new rays hit other surfaces and bounce in turn, and so on until a ray reaches a light source. That final ray then takes on the properties of the light source and passes this information back down the chain, so the color at each bounce point can be calculated. Because ray tracing is recursive, the number of calculations grows enormously with every bounce, so the number of new rays per bounce and the number of bounces are usually limited. Advances in hardware processing power allow ray tracing renderers to create visuals indistinguishable from reality; they are widely used in movies and architectural visualization.
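
The recursive idea can be sketched in a few dozen lines of Python. This is a heavily simplified illustration under several assumptions that are not from any real renderer: the scene contains only spheres, every surface is a perfect mirror that passes on a fixed fraction of the light, each bounce spawns a single new ray, and any ray that leaves the scene is treated as having reached a uniform light (`SKY`). Brightness is a single number rather than a color.

```python
import math

SKY = 1.0  # assumed: any ray that escapes the scene reaches a uniform light
# Hypothetical scene: (center, radius, reflectance) for each sphere.
SPHERES = [((0.0, 0.0, 5.0), 1.0, 0.5)]

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the first intersection, or None.

    Rays that start inside a sphere are ignored in this sketch.
    """
    oc = [o - c for o, c in zip(origin, center)]
    b = 2.0 * sum(d * v for d, v in zip(direction, oc))
    c = sum(v * v for v in oc) - radius * radius
    disc = b * b - 4.0 * c
    if disc < 0.0:
        return None
    t = (-b - math.sqrt(disc)) / 2.0
    return t if t > 1e-6 else None

def trace(origin, direction, depth):
    """Brightness seen along a ray, following at most `depth` bounces."""
    nearest = None
    for center, radius, reflectance in SPHERES:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, center, radius, reflectance)
    if nearest is None:
        return SKY  # the ray reached the light
    if depth == 0:
        return 0.0  # bounce budget used up: treat the point as dark
    t, center, radius, reflectance = nearest
    point = [o + t * d for o, d in zip(origin, direction)]
    normal = [(p - c) / radius for p, c in zip(point, center)]
    d_dot_n = sum(d * n for d, n in zip(direction, normal))
    reflected = [d - 2.0 * d_dot_n * n for d, n in zip(direction, normal)]
    # Recursive step: the surface passes on a fraction of what the bounce sees.
    return reflectance * trace(point, reflected, depth - 1)

print(trace((0.0, 0.0, 0.0), (0.0, 0.0, 1.0), depth=2))  # hits the sphere: 0.5
print(trace((0.0, 0.0, 0.0), (0.0, 1.0, 0.0), depth=2))  # misses everything: 1.0
```

The `depth` argument is the bounce limit mentioned above: without it, rays trapped between reflective surfaces could recurse indefinitely.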

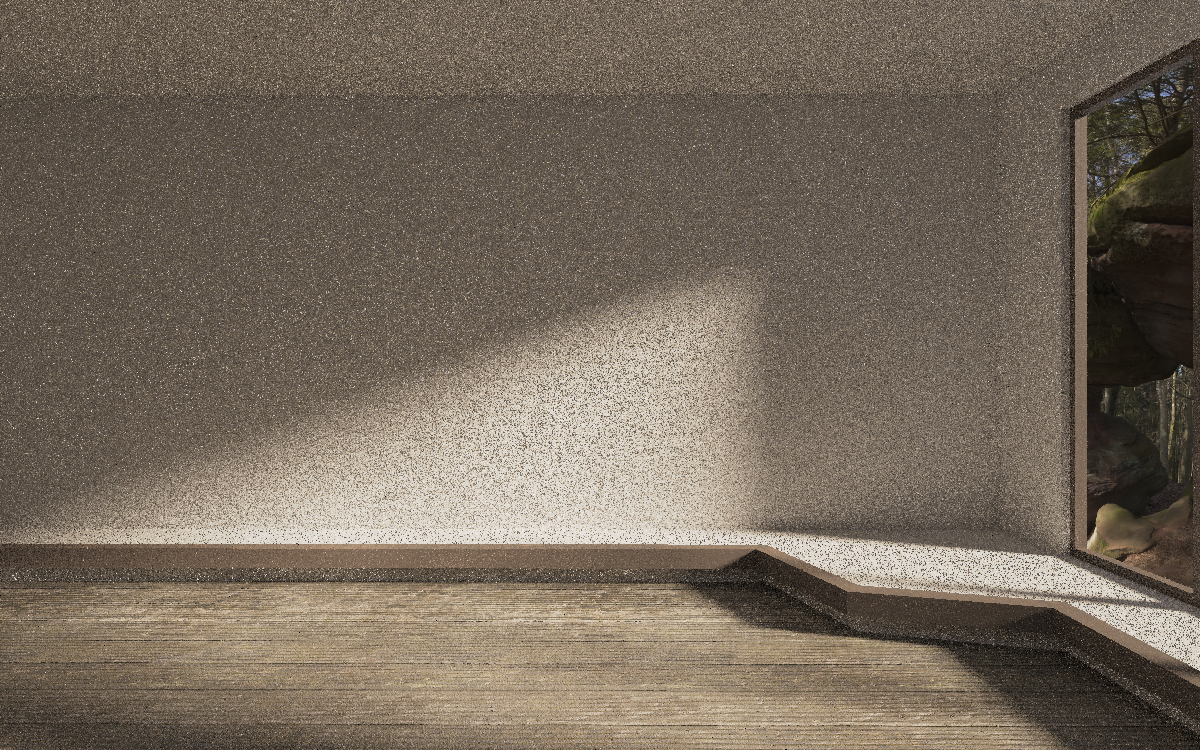

Corona Renderer is a ray tracing engine. At the beginning of rendering, the image appears very noisy. As time passes, the pixel colors are refined step by step, and the noise disappears.

Both ray tracing and rasterization techniques are currently used in computer graphics, and the choice between the two comes from answering the question: how fast do you need the result?

Realtime vs. production rendering

Ray tracing requires so much processing power that even top-tier processors can’t perform it quickly. Creating photorealistic images for movies and 3D cartoons takes time and is done on clusters of linked servers called render farms. This way, calculations that would take months on a single processor can be completed in hours. Of course, single images render much faster, taking from several minutes to several hours on a personal computer, depending on the GPU or CPU.
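
A back-of-the-envelope calculation shows where the "months to hours" difference comes from. The numbers below are purely hypothetical (a 5-minute animation at 24 frames per second, 15 minutes of rendering per frame, 200 identical machines), and the sketch ignores file transfer and scheduling overhead.

```python
import math

def render_hours(frames, minutes_per_frame, nodes=1):
    """Wall-clock hours when frames are split evenly across identical nodes."""
    frames_per_node = math.ceil(frames / nodes)
    return frames_per_node * minutes_per_frame / 60.0

frames = 5 * 60 * 24  # 5 minutes of animation at 24 fps = 7200 frames

single = render_hours(frames, minutes_per_frame=15)
farm = render_hours(frames, minutes_per_frame=15, nodes=200)
print(single, single / 24)  # 1800.0 hours, i.e. 75.0 days on one machine
print(farm)                 # 9.0 hours on the farm
```

Because every frame can be rendered independently, the work divides almost perfectly across machines.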

Learn more about how render farms work here - https://megarender.com/render-farm/

However, in video games and interactive media, waiting even a few seconds for an image is too much. A frame rate of 60 frames per second is a common target for comfortable gaming. This makes rasterization the primary technique. To make sure their games run smoothly and look fantastic, game developers optimize their models, textures, and overall scenes as much as possible. Most lights and shadows are baked, that is, pre-calculated once and reused during rendering regardless of the user's input. Some effects are faked instead of being calculated. Smaller, compressed versions of textures are used for small and distant objects. Altogether, this produces realistic graphics at high speed. In the past few years, new technologies have brought ray tracing into game engines, but it is mostly used to calculate lighting effects. Perhaps in another decade, all modern games will be rendered with ray tracing.
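
To see why a few seconds per image is out of the question for games, it helps to turn the frame rate into a time budget per frame. The helper names below are made up for this illustration.

```python
def frame_budget_ms(fps):
    """Milliseconds available to produce one frame at a given frame rate."""
    return 1000.0 / fps

def fits_budget(render_ms, fps):
    """True when a frame that takes `render_ms` keeps up with the target rate."""
    return render_ms <= frame_budget_ms(fps)

print(round(frame_budget_ms(60), 2))  # 16.67
print(fits_budget(3000, 60))          # a 3-second frame: False
print(fits_budget(12, 60))            # a 12 ms frame: True
```

At 60 frames per second, everything the game draws has to be finished in under 17 milliseconds, which is why so much lighting is pre-calculated instead of being computed on the fly.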

GPU and CPU rendering

Realtime rendering is possible thanks to the power of modern GPUs. However, rendering can also be done on CPUs when there is no tight time limit. Traditionally, heavy rendering has been done on CPUs, and it benefits greatly from a high number of processing cores and threads. The CPU loads all the needed assets into system RAM, and the RAM’s speed affects rendering time. It is also easy to link several cheaper, less powerful CPUs together to work on one rendering task.

Modern GPUs can do the same work even faster because of the far larger number of cores and threads they have. They also load assets into dedicated on-board VRAM, which is much faster than system RAM but also very limited: VRAM cannot be expanded, and some resource-demanding scenes are too large for it to hold. These scenes need to be optimized before GPU rendering, although the whole process still usually takes less time than rendering on a CPU. With advances in software and hardware, GPU rendering takes up a larger portion of the market each year.
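
A rough estimate shows how quickly a scene can outgrow VRAM. The sketch below counts only uncompressed textures with four 8-bit channels and ignores geometry, frame buffers, and the renderer's own overhead; the texture counts and the 8 GiB card are hypothetical.

```python
def texture_bytes(width, height, channels=4, bytes_per_channel=1):
    """Size of one uncompressed texture in bytes."""
    return width * height * channels * bytes_per_channel

def fits_in_vram(texture_sizes, vram_gib):
    """True when all textures together fit into the given amount of VRAM."""
    total = sum(texture_bytes(w, h) for w, h in texture_sizes)
    return total <= vram_gib * 1024 ** 3

one_4k = texture_bytes(4096, 4096)
print(one_4k / 1024 ** 2)                        # 64.0 MiB per 4096 x 4096 texture
print(fits_in_vram([(4096, 4096)] * 100, 8))     # 6.25 GiB of textures: True
print(fits_in_vram([(4096, 4096)] * 200, 8))     # 12.5 GiB of textures: False
```

When the estimate does not fit, the usual options are smaller or compressed textures, or rendering on the CPU, where system RAM can be expanded.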

Rendering engines are usually developed to use either the GPU or the CPU. However, engines like V-Ray and Cycles can use either.

Currently, GPUs are much more expensive than CPUs, largely because of the spread of cryptomining. Not many render engines support linking several weaker GPUs together, and such a setup can’t pool their VRAM into one. CPUs work better when linked together in a render farm. Additionally, many VFX and animation studios already build rendering time into their workflows, which makes CPU rendering more cost-effective for them.

If your computer struggles with rendering, you can still produce large, beautiful images by rendering in the cloud. At Megarender, we provide access to servers built on the AMD Threadripper 3990X, a 64-core CPU. They can easily handle big scenes at high image resolutions and deliver fast results. Megarender is a 3D render farm just for your project!